You have probably come across the terms “Big Data” and “Cloud Computing.” You might even have used them if you work in developing cloud applications. Many public cloud services perform big data analytics, so the two go hand in hand.

Keeping up with cloud infrastructure best practices and with the kinds of data stored in large quantities is essential given the growing popularity of Software as a Service (SaaS). We’ll examine the differences between big data and cloud computing, how they relate to each other, and why the two are a perfect match for producing a variety of cutting-edge technologies, including artificial intelligence.

What is Big Data in the Cloud?

Big data and cloud computing are two distinctly different ideas, but they have become so interwoven that they are almost inseparable, so it’s essential to define each one and see how they relate. A company’s investment in big data infrastructure only pays off while that infrastructure is in use; when it sits idle, it is wasted. These infrastructure requirements have long limited the technology to the largest and best-funded businesses, and this is an area where cloud computing has made significant progress.


What is Big Data?

Big data refers to massive amounts of data, whether structured, semi-structured, or unstructured. It revolves around analytics, and the data is typically drawn from various sources such as user input, IoT sensors, and sales records. The term also describes the process of analyzing massive amounts of data to answer a question or spot a pattern or trend. Data is analyzed using a set of mathematical algorithms, and the choice of algorithms depends on the meaning of the data, the number of sources involved, and the business purpose of the analysis. Distributed computing software platforms such as Apache Hadoop, Databricks, and Cloudera are used to divide and organize such complex analytics. The challenge with big data is the amount of computing and networking infrastructure required to build a big data facility: the cost of servers, storage, dedicated networks, and the software expertise needed to set up a working distributed computing environment can be high.
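To illustrate how a platform like Apache Hadoop divides analytics work, here is a minimal, single-process Python sketch of the MapReduce pattern (map, shuffle, reduce) applied to a word count. It only simulates the idea: the partitions stand in for cluster nodes, and the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical, not part of Hadoop's or any other platform's API.

```python
from collections import defaultdict
from typing import Iterable


def map_phase(record: str) -> list[tuple[str, int]]:
    # Emit a (word, 1) pair for every word in one input record.
    return [(word.lower(), 1) for word in record.split()]


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Group all values by key, as the framework does before the reduce step.
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def reduce_phase(grouped: dict[str, list[int]]) -> dict[str, int]:
    # Combine the values for each key into a single total.
    return {key: sum(values) for key, values in grouped.items()}


def word_count(records: list[str], num_partitions: int = 2) -> dict[str, int]:
    # Split the input into partitions; on a real cluster each would live on a different node.
    partitions = [records[i::num_partitions] for i in range(num_partitions)]
    mapped = [pair for part in partitions for record in part for pair in map_phase(record)]
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    sales_notes = ["cloud storage order", "cloud compute order", "storage refund"]
    print(word_count(sales_notes))
```

On a real cluster, the map step runs in parallel on the nodes holding each partition and the framework performs the shuffle over the network; that distribution is what lets the same logic scale to data sets far larger than one machine can hold.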

What is Cloud Computing?

Cloud computing offers on-demand computing resources and services. Users can quickly assemble the infrastructure they need from cloud-based compute instances and storage resources, connect cloud services, upload data sets, and run analyses in the cloud. In the public Cloud, users can access virtually unlimited resources, use them for as long as they need, and then shut down the environment, paying only for the resources and services they used. Big data has found an ideal platform in the public Cloud. A business can use cloud resources and services on demand without building, owning, or maintaining the infrastructure itself, so the Cloud makes big data analytics technologies available and affordable to almost any enterprise.

Advantages of using Big Data in the Cloud

Businesses of all sizes gain a variety of significant advantages from the Cloud. The following are some of the most significant and immediate advantages of big data in the Cloud.

- Scalability – A typical business data centre doesn’t have enough room, power, cooling, or money to buy and deploy the massive amount of hardware needed to build a big data infrastructure. In contrast, a public cloud operates tens of thousands of servers spread across a network of data centres worldwide. Because the necessary hardware and software services are already available, customers can assemble the infrastructure for a big data project of almost any size.

- Agility – Big data initiatives vary widely. One project might require 100 servers, while another might call for 2,000. In the Cloud, users can provision as many resources as a task requires and then release those resources afterwards.

- Cost – A company’s data centre is a significant capital expenditure. In addition to the hardware, businesses must pay for facilities, power, routine maintenance, and more. The Cloud folds all those expenses into a flexible rental model in which resources and services are offered on demand and billed per use.

- Accessibility – Many clouds have a global footprint, allowing resources and services to be deployed in most of the world’s major regions. This makes it possible to process data close to where it is stored. If a significant amount of data is already stored in a particular cloud region, it is relatively easy to deploy the resources and services for a big data project in that region rather than incurring the cost of moving the data elsewhere.

- Resilience – The real value of big data projects lies in the data, and cloud resilience makes data storage dependable. Clouds routinely replicate data to keep storage resources highly available, and even more robust storage options are available in the Cloud.
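The scalability, agility, and cost points above can be made concrete with some simple arithmetic. The Python sketch below assumes a hypothetical flat rate of $0.50 per server-hour and reuses the two project sizes mentioned under Agility (100 and 2,000 servers); real pricing varies by provider, region, and instance type. It compares what each job costs on demand with the number of servers an owned data centre would need to handle the largest job, and how much of that owned capacity would be used.

```python
from dataclasses import dataclass

# Hypothetical price for illustration only; real rates vary by provider and region.
HOURLY_RATE_PER_SERVER = 0.50  # USD per server-hour (assumed)


@dataclass
class Job:
    name: str
    servers: int
    hours: float


def on_demand_cost(job: Job, rate: float = HOURLY_RATE_PER_SERVER) -> float:
    # Pay-per-use: billed only for the servers used, for as long as they run.
    return job.servers * job.hours * rate


def peak_capacity(jobs: list[Job]) -> int:
    # An owned data centre must be sized for the largest job it will run.
    return max(job.servers for job in jobs)


def utilization(jobs: list[Job], period_hours: float) -> float:
    # Fraction of owned server-hours actually used during the period.
    used = sum(job.servers * job.hours for job in jobs)
    return used / (peak_capacity(jobs) * period_hours)


if __name__ == "__main__":
    jobs = [Job("daily report", 100, 24), Job("quarterly model", 2000, 6)]
    for job in jobs:
        print(f"{job.name}: ${on_demand_cost(job):,.2f} on demand")
    print("servers needed if owned:", peak_capacity(jobs))
    print(f"owned capacity utilization over 24 h: {utilization(jobs, 24):.0%}")
```

With these assumed numbers, the two jobs cost $1,200 and $6,000 on demand, while owned hardware sized for the 2,000-server peak would be only 30% utilized over the day.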

Disadvantages of using Big Data in Cloud Computing

Big data storage use cases have demonstrated the value of public clouds and numerous third-party big data services. Despite these advantages, businesses must take some potential drawbacks into account. Big data in the Cloud can have the following significant drawbacks.

- Network dependence – Using the Cloud requires seamless connectivity from the LAN to the cloud provider’s network across the internet. Outages along that network path can cause increased latency or, in the worst case, make the Cloud completely inaccessible. Even though an outage may not affect a big data project as severely as it would a mission-critical workload, its impact should still be considered in any big data use of the Cloud.

- Storage costs – Cloud data storage can make big data projects expensive over the long term. The three main cost drivers are data migration, storage, and retention. Loading large amounts of data into the Cloud takes time, and storage is billed monthly. Additional charges may apply if the data needs to be moved again. Additionally, because big data sets are frequently time-sensitive, some data may no longer be useful for analysis even a few hours after it is collected. Since keeping unnecessary data around costs money, businesses must implement thorough data retention and deletion policies to control the cloud storage costs of big data.

- Security – Big data projects may use confidential or personally identifiable information subject to data protection laws and other private or public sector rules. Users of the Cloud must take the necessary precautions to maintain security in cloud computing and storage, including proper authentication and authorization, encryption for data in transit and at rest, and extensive logging of their data access and use.

- Lack of standardization – There is no single standard way to design, implement, and manage a big data deployment in the Cloud, and an ad hoc approach may result in subpar performance and expose the company to security risks. Business users should document the big data architecture, along with any policies and procedures governing its use. This documentation can serve as a baseline for future optimizations and improvements.
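A retention policy like the one recommended under Storage costs can be expressed as a simple rule: delete anything older than a fixed window. The Python sketch below applies that rule to an in-memory inventory of objects; the `StoredObject` type, the 30-day window, and the $0.02 per GB-month price are hypothetical assumptions, not any provider's API or pricing. In practice, many object stores (Amazon S3 lifecycle rules, for example) can enforce expiration policies like this natively.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class StoredObject:
    # Hypothetical record for one stored file; not a cloud SDK type.
    key: str
    last_modified: datetime
    size_gb: float


def expired_objects(objects: list[StoredObject], retention: timedelta,
                    now: datetime) -> list[StoredObject]:
    # An object expires once it is older than the retention window.
    cutoff = now - retention
    return [obj for obj in objects if obj.last_modified < cutoff]


def monthly_savings(expired: list[StoredObject], price_per_gb_month: float) -> float:
    # Storage cost avoided each month by deleting the expired objects (assumed flat price).
    return sum(obj.size_gb for obj in expired) * price_per_gb_month


if __name__ == "__main__":
    now = datetime(2024, 1, 31, tzinfo=timezone.utc)
    inventory = [
        StoredObject("clickstream/2023-11-01.parquet",
                     datetime(2023, 11, 1, tzinfo=timezone.utc), 120.0),
        StoredObject("clickstream/2024-01-30.parquet",
                     datetime(2024, 1, 30, tzinfo=timezone.utc), 95.0),
    ]
    to_delete = expired_objects(inventory, retention=timedelta(days=30), now=now)
    for obj in to_delete:
        print("delete", obj.key)
    print(f"estimated monthly savings: ${monthly_savings(to_delete, 0.02):.2f}")
```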

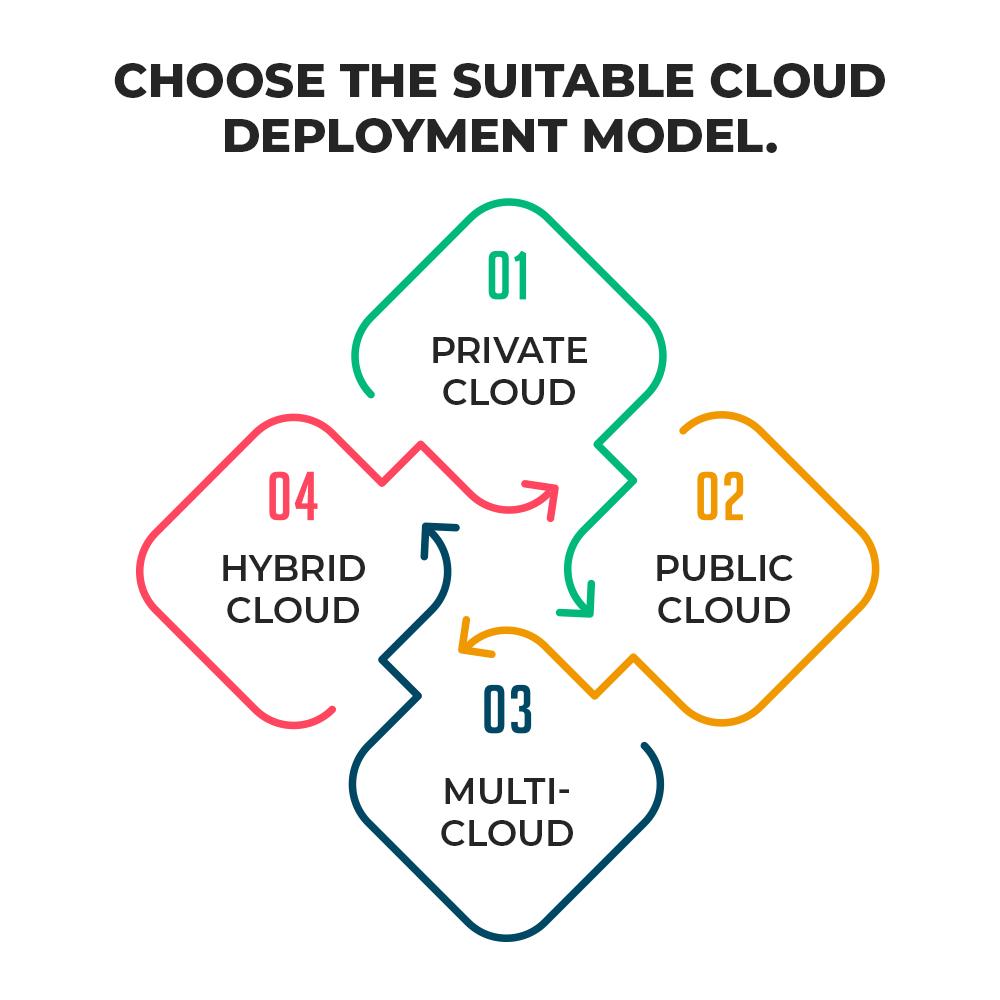

Choose the Right Cloud Deployment Model

Which cloud deployment model, then, is best for big data solutions? The four cloud models typically available to organizations are public, private, hybrid, and multi-cloud, and understanding each model’s characteristics and tradeoffs is crucial.

Private Cloud

Private clouds often let businesses take full control of their cloud environment to meet particular regulatory, security, or availability requirements. However, they are more expensive because the business must own and operate the entire infrastructure. As a result, private clouds are usually reserved for sensitive, small-scale big data projects.

Public Cloud

The public Cloud is the best option for big data deployments of almost any size due to its scalability and on-demand availability of resources. However, public cloud users must manage the resources and services they access. Under a shared responsibility model, the public cloud provider secures the underlying cloud infrastructure, while users are responsible for configuring and managing the security of the resources, data, and access controls they deploy in it.

Hybrid Cloud

A hybrid cloud is advantageous when particular resources need to be shared. For instance, a hybrid cloud might keep large data stores in a local private cloud, keeping data sets on premises and secure, while using the public Cloud for computing resources and big data analytics services. On the other hand, hybrid clouds can be more challenging to set up and manage, and users must deal with the problems and concerns of both public and private clouds.

Multi-cloud

Using multiple clouds can help users maintain availability and maximize cost savings. However, multiple clouds are harder to manage because resources and services are rarely the same across providers, and this model can lead to more security lapses and compliance violations than using a single public cloud. Given the size of big data projects, the extra complexity of a multi-cloud deployment can make the effort more complicated than necessary.

Conclusion

The services, that is, the analytical tools, make big data analytics possible, even though the underlying hardware receives most of the attention and funding in big data initiatives. The good news is that businesses looking to put big data initiatives into practice don’t have to start from scratch. In addition to providing services and documentation, providers can also offer support and consulting to help businesses optimize their big data projects.