What is Exploratory Data Analysis?

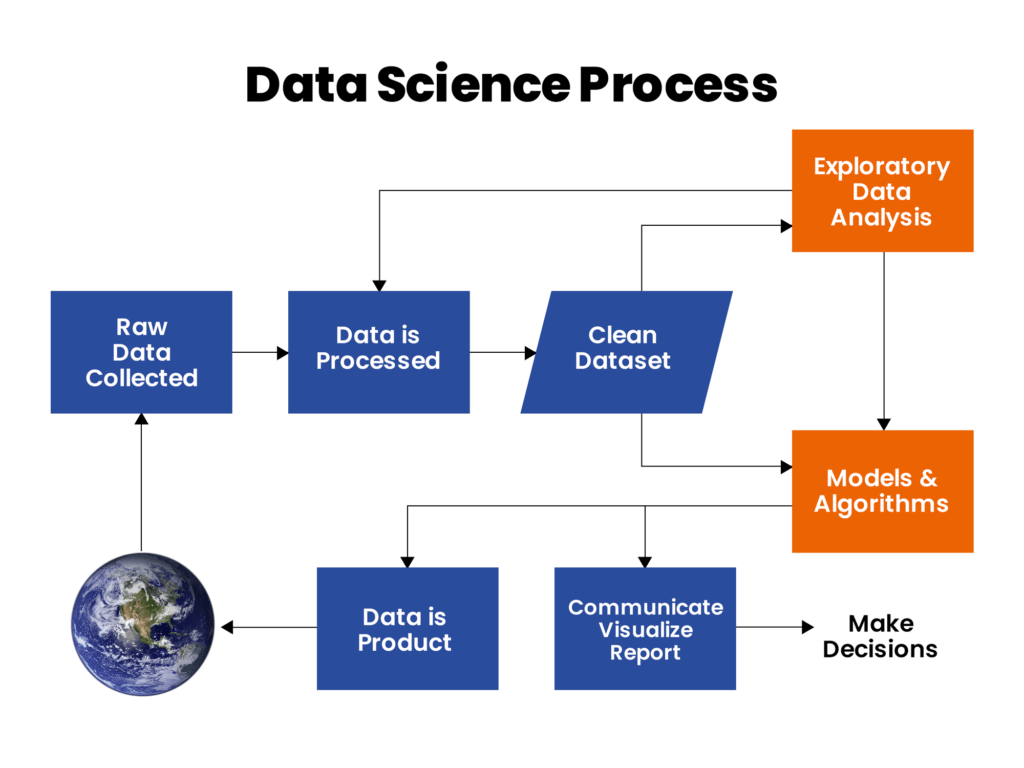

Exploratory Data Analysis (EDA) is an approach to analyzing and understanding data sets through statistical methods and visualizations. EDA aims to uncover patterns, relationships, and insights in the data that might not be immediately obvious. It is an iterative process that involves summarizing the main characteristics of a data set, creating visualizations to spot outliers and trends, and transforming the data to fit the requirements of more advanced models.

EDA is often the first step in the data analysis process and helps inform the development of more formal models. It is an important step in the data science workflow as it provides insights into the quality of the data and any potential issues that need to be addressed before building more advanced models. EDA is typically done using software tools such as R or Python, with popular libraries such as pandas, matplotlib, and seaborn.

Exploratory Data Analysis Steps

Exploratory Data Analysis (EDA) plays a crucial role in data analytics and model-building. The following are the reasons for its importance and relevance:

- Understanding the Data: EDA helps understand the data structure, variables, and relationships. It provides insights into the nature of the data, including the presence of outliers, skewness, and other data characteristics.

- Data Cleaning: EDA helps to identify missing values, duplicate data, and other data quality issues. Cleaning the data is crucial for building accurate models, and EDA helps to identify areas that require cleaning.

- Data Visualization: EDA allows for data visualization in various ways. This helps to understand the data’s distribution, relationships, and patterns. Graphical representation of the data is a key component of EDA and provides insights into the data that might not be possible through other methods.

- Feature Selection: EDA helps to identify the important features that should be used in the model-building process. It can reduce the number of features in the data and simplify the model, thus reducing the risk of overfitting.

- Model Validation: EDA provides the basis for validating the model by allowing us to check if the model is correctly capturing the relationships in the data. It helps to identify the areas where the model is not working as expected and provides insights into how to improve the model.

- Communication: EDA provides a way to communicate the insights and findings of the data analysis to stakeholders. By visualizing the data and summarizing the key insights, EDA provides a way to communicate complex data in a way that is easily understood by others.

Overall, EDA is a crucial step in the data analytics and model-building process. It provides a foundation for building accurate models that can be used to make informed decisions.
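As a rough illustration of the data-understanding and data-cleaning points above, the sketch below runs a few basic quality checks with pandas. The DataFrame and its column names (`height_cm`, `group`) are hypothetical and exist only for this example.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data: one missing value and one duplicated row.
df = pd.DataFrame({
    "height_cm": [150.0, 160.0, 160.0, np.nan, 175.0, 210.0],
    "group": ["a", "b", "b", "a", "c", "c"],
})

# Number of missing (NaN) values in each column.
missing_per_column = df.isna().sum()

# Number of rows that exactly repeat an earlier row.
duplicate_rows = int(df.duplicated().sum())

# Sample skewness; a positive value suggests a longer right tail.
skewness = df["height_cm"].skew()

print(missing_per_column)
print("Duplicate rows:", duplicate_rows)
print("Skewness of height_cm:", round(skewness, 3))
```

Checks like these are usually only a starting point; what counts as a problem depends on the dataset and the model you plan to build.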

Types of Exploratory Data Analysis

There are four main types of exploratory data analysis (EDA):

- Univariate Non-Graphical

- Univariate Graphical

- Multivariate Non-Graphical

- Multivariate Graphical

Univariate Non-graphical

Univariate Non-graphical Exploratory Data Analysis (EDA) involves analyzing each variable in a dataset individually, without visualizations. The goal of univariate non-graphical EDA is to understand the distribution and characteristics of a single variable in the data.

Some common techniques used in univariate non-graphical EDA include:

- Descriptive Statistics: This involves calculating measures such as mean, median, mode, standard deviation, quartiles, and range to summarize the main characteristics of the data.

- Frequency Tables: This involves counting the number of occurrences of each unique value in a variable and creating a frequency table to summarize the data distribution.

- Percentiles: A percentile is the value below which a given percentage of the observations fall. For example, the 25th, 50th, and 75th percentiles are the first quartile, the median, and the third quartile. Percentiles can be used to summarize the data distribution.

- Z-Scores: This involves standardizing the values in a variable by subtracting the mean and dividing by the standard deviation, which can be used to identify outliers in the data.
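The four techniques above can be sketched with pandas as follows. The sample values are made up for illustration, and the z-score cutoff of 2 is an assumption chosen because the sample is tiny; a cutoff of 3 is more common on larger datasets.

```python
import pandas as pd

# Hypothetical sample with one unusually large value (30).
values = pd.Series([2, 3, 3, 4, 4, 4, 5, 5, 6, 30], name="x")

# Descriptive statistics
mean = values.mean()
median = values.median()
mode = values.mode().iloc[0]   # first mode if there are ties
std = values.std()             # sample standard deviation (ddof=1)

# Frequency table: how often each unique value occurs
frequency = values.value_counts().sort_index()

# Percentiles: 25th, 50th, and 75th
percentiles = values.quantile([0.25, 0.50, 0.75])

# Z-scores: distance from the mean in units of standard deviation
z_scores = (values - mean) / std
outliers = values[z_scores.abs() > 2]  # illustrative cutoff, see note above

print(frequency)
print(percentiles)
print("Possible outliers:", outliers.tolist())
```

Note how the single extreme value pulls the mean well above the median, which is itself a useful signal during EDA.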

Univariate Graphical

Univariate Graphical Exploratory Data Analysis (EDA) involves visualizations to analyze each variable in a dataset individually. The goal of univariate graphical EDA is to understand the distribution and characteristics of a single variable in the data.

Some common visualizations used in univariate graphical EDA include:

- Histograms: A histogram is a bar graph representing a single variable’s distribution by dividing the data into intervals (or bins) and counting the number of observations in each bin. Histograms can help identify the distribution’s shape and highlight skewness, outliers, and patterns in the data.

- Box Plots: A box plot (or box-and-whisker plot) is a visualization that represents the distribution of a single variable by plotting the median, quartiles, and outliers in the data. Box plots can provide a compact distribution summary and highlight outliers and skewness in the data.

- Density Plots: A density plot is a smoothed histogram that estimates the probability density function of a single variable. Density plots can be used to visualize the distribution and highlight the shape of the data, including skewness and multi-modality.

- Violin Plots: A violin plot combines a box plot and a density plot to provide a more detailed visualization of the distribution of a single variable. Violin plots can provide information on the data’s shape, spread, and skewness, as well as the presence of multiple modes.
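A minimal sketch of three of these plots using only Matplotlib is shown below. The data is synthetic (drawn from a normal distribution with arbitrary parameters), and a density plot is left out because it needs a kernel density estimate, which libraries such as seaborn provide.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen rendering; remove to use an interactive backend
import matplotlib.pyplot as plt
import numpy as np

# Synthetic sample; the mean and spread are arbitrary choices for illustration.
rng = np.random.default_rng(0)
sample = rng.normal(loc=5.8, scale=0.8, size=150)

fig, axes = plt.subplots(1, 3, figsize=(12, 3.5))

# Histogram: counts of observations per bin
axes[0].hist(sample, bins=15, edgecolor="black")
axes[0].set_title("Histogram")

# Box plot: median, quartiles, and points beyond the whiskers
axes[1].boxplot(sample)
axes[1].set_title("Box plot")

# Violin plot: mirrored density estimate with the median marked
axes[2].violinplot(sample, showmedians=True)
axes[2].set_title("Violin plot")

fig.tight_layout()
# fig.savefig("univariate_plots.png")  # uncomment to write the figure to disk
```

Placing the three views side by side makes it easier to see that they describe the same distribution at different levels of detail.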

Multivariate Non-Graphical

Multivariate Non-Graphical Exploratory Data Analysis (EDA) involves analyzing the relationship between multiple variables in a dataset without visualizations. The goal of multivariate non-graphical EDA is to understand how different variables in the data interact and influence one another.

Some common techniques used in multivariate non-graphical EDA include:

- Correlation Matrix: A correlation matrix is a table that shows the correlation between all pairs of variables in the data. Correlation measures the linear relationship between two variables and can be used to identify strongly or weakly associated variables.

- Covariance Matrix: A covariance matrix is a table that shows the covariance between all pairs of variables in the data. Covariance measures the joint variability between two variables and can be used to identify variables that change together.

- Regression Analysis: Regression analysis is a statistical method that involves fitting a line or curve to the data to model the relationship between a dependent variable and one or more independent variables. Regression analysis can estimate the strength and direction of the relationship between variables and make predictions about future values based on past observations.

- ANOVA: Analysis of Variance (ANOVA) is a statistical method that involves comparing the means of two or more data groups to determine if there is a significant difference between the groups. ANOVA can be used to identify variables that significantly affect the outcome and test hypotheses about the relationship between variables.
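The techniques above can be sketched with pandas and NumPy. All data here is hypothetical, and the one-way ANOVA F statistic is computed by hand for transparency; in practice a statistics library would also report a p-value.

```python
import numpy as np
import pandas as pd

# Hypothetical data: y is exactly 2 * x + 1, z has no clear relation to x.
df = pd.DataFrame({
    "x": [1.0, 2.0, 3.0, 4.0, 5.0],
    "y": [3.0, 5.0, 7.0, 9.0, 11.0],
    "z": [2.0, 9.0, 4.0, 8.0, 1.0],
})

corr_matrix = df.corr()  # Pearson correlation by default
cov_matrix = df.cov()    # sample covariance (ddof=1)

# Simple linear regression y = slope * x + intercept via least squares.
slope, intercept = np.polyfit(df["x"], df["y"], deg=1)

# One-way ANOVA F statistic for three hypothetical groups.
groups = [
    np.array([1.0, 2.0, 3.0]),
    np.array([2.0, 3.0, 4.0]),
    np.array([5.0, 6.0, 7.0]),
]
all_values = np.concatenate(groups)
grand_mean = all_values.mean()

# Variation of group means around the grand mean
ss_between = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
# Variation of observations around their own group mean
ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)

df_between = len(groups) - 1
df_within = len(all_values) - len(groups)
f_statistic = (ss_between / df_between) / (ss_within / df_within)

print(corr_matrix.round(2))
print("slope:", round(slope, 3), "intercept:", round(intercept, 3))
print("F statistic:", round(f_statistic, 3))
```

A large F statistic indicates that the group means differ by more than the within-group spread would suggest, but judging significance still requires comparing it against the F distribution.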

Multivariate Graphical

Multivariate Graphical Exploratory Data Analysis (EDA) involves analyzing the relationship between multiple variables in a dataset using visualizations. The goal of multivariate graphical EDA is to understand how different variables in the data interact and influence one another and to identify patterns and relationships that can inform further analysis and modeling.

Some common visualizations used in multivariate graphical EDA include:

- Scatter Plots: A scatter plot is a visualization that plots the values of two variables on a graph, with one variable on the x-axis and the other on the y-axis. Scatter plots can be used to visualize the relationship between variables, including the strength, direction, and shape of the relationship, as well as the presence of outliers and skewness.

- Pair Plots: A pair plot (or scatter plot matrix) is a visualization that plots all possible combinations of two variables in the data in a grid of scatter plots. Pair plots can provide a comprehensive overview of the relationships between all variables in the data, including the presence of correlations, outliers, and skewness.

- Heat Maps: A heat map is a visualization that arranges two variables along the rows and columns of a grid, with the color of each cell representing the value of a third quantity (for example, the correlation between the row and column variables). Heat maps can visualize the relationship between variables and identify patterns and correlations in the data.

- Parallel Coordinates: A parallel coordinates plot is a visualization that draws each variable as one of several parallel vertical axes, with each observation in the data represented by a line that crosses every axis at that observation's value. Parallel coordinates plots can be used to visualize the relationship between variables and to identify outliers and clusters in the data.
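The sketch below draws a pair plot, a correlation heat map, and a parallel coordinates plot using pandas' plotting helpers and Matplotlib. The DataFrame is synthetic, and the column names (`length`, `width`, `area`, `label`) are assumptions made for this example.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen rendering; remove to use an interactive backend
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from pandas.plotting import parallel_coordinates, scatter_matrix

# Synthetic data with a derived column so that some correlation exists.
rng = np.random.default_rng(1)
n = 60
df = pd.DataFrame({
    "length": rng.normal(5.8, 0.8, n),
    "width": rng.normal(3.0, 0.4, n),
})
df["area"] = df["length"] * df["width"]
df["label"] = np.where(df["length"] > 5.8, "long", "short")

numeric = df[["length", "width", "area"]]

# Pair plot: grid of scatter plots with histograms on the diagonal
pair_axes = scatter_matrix(numeric, figsize=(6, 6), diagonal="hist")

# Heat map: color encodes the correlation between each pair of variables
corr = numeric.corr()
fig, ax = plt.subplots()
image = ax.imshow(corr.to_numpy(), cmap="coolwarm", vmin=-1, vmax=1)
ax.set_xticks(range(len(corr.columns)))
ax.set_xticklabels(corr.columns)
ax.set_yticks(range(len(corr.columns)))
ax.set_yticklabels(corr.columns)
fig.colorbar(image, ax=ax, label="Pearson correlation")

# Parallel coordinates: one line per observation, colored by label
fig2, ax2 = plt.subplots()
parallel_coordinates(df, class_column="label", ax=ax2)
```

Parallel coordinates plots are sensitive to the scale of each variable, so rescaling the columns first often makes the result easier to read.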

Exploratory Data Analysis Tools

Several tools can be used for Exploratory Data Analysis in data science, including:

- R: R is a widely used programming language for statistical computing and data analysis. It has many packages and libraries designed explicitly for EDA, including the “tidyverse” collection of packages, which provides a comprehensive suite of tools for data manipulation, visualization, and analysis.

- Python: Python is another widely used programming language for data science, with several libraries and packages specifically designed for EDA. Popular Python libraries for EDA include Pandas, Seaborn, Matplotlib, and Plotly, which provide data manipulation, visualization, and analysis tools.

- Tableau: Tableau is a data visualization and business intelligence tool that provides an interactive and user-friendly interface for EDA. Tableau allows users to easily explore and visualize their data using a drag-and-drop interface and provides a wide range of visualizations and charts to support EDA.

- QlikView: QlikView is a business intelligence and data visualization tool that provides interactive visualizations and dashboards for EDA. QlikView allows users to explore and analyze their data efficiently and offers a wide range of visualizations and charts to support EDA.

EDA in Python

Here is an example of how Exploratory Data Analysis (EDA) can be performed using Python:

Import libraries

To start, you must import the necessary libraries, such as Pandas and Matplotlib.

import pandas as pd

import matplotlib.pyplot as plt

Load the data

Next, you will need to load the data into a Pandas DataFrame. For this example, we’ll use the famous Iris dataset:

iris = pd.read_csv('iris.csv')

Data Exploration

To explore the data, you can use various functions and methods provided by Pandas. For example, to see the first few rows of the data, you can use the head() method:

print(iris.head())

Summary Statistics

To get summary statistics of the data, you can use the describe() method:

print(iris.describe())

Univariate Analysis

To perform univariate analysis, you can plot histograms and box plots using the Matplotlib library. For example, to plot the histogram of the Sepal Length column, you can use the following code:

plt.hist(iris['Sepal Length'])

plt.xlabel('Sepal Length')

plt.ylabel('Frequency')

plt.title('Histogram of Sepal Length')

plt.show()
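A box plot of the same column could be drawn along similar lines. The sketch below uses a small stand-in DataFrame with a handful of illustrative rows so that it runs without iris.csv; with the real data you would reuse the `iris` DataFrame loaded earlier.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen rendering; remove to use an interactive backend
import matplotlib.pyplot as plt
import pandas as pd

# Stand-in for the iris DataFrame; a few illustrative rows only.
iris = pd.DataFrame(
    {"Sepal Length": [5.1, 4.9, 4.7, 7.0, 6.4, 6.9, 6.3, 5.8, 7.1]}
)

fig, ax = plt.subplots()
ax.boxplot(iris["Sepal Length"])  # box spans Q1 to Q3, line marks the median
ax.set_ylabel("Sepal Length")
ax.set_title("Box Plot of Sepal Length")
# fig.savefig("sepal_length_boxplot.png")  # uncomment to write the figure to disk
```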

Multivariate Analysis

You can use scatter plots and pair plots to perform multivariate analysis. For example, to plot a scatter plot between Sepal Length and Sepal Width, you can use the following code:

plt.scatter(iris['Sepal Length'], iris['Sepal Width'])

plt.xlabel('Sepal Length')

plt.ylabel('Sepal Width')

plt.title('Scatter Plot of Sepal Length vs Sepal Width')

plt.show()

Here is a script in Python that reports the missing values in each column, the outliers based on IQR in each numerical column, the data type of each column, a count of columns by data type (class), the mean, median, and mode of each numerical column, and the mode of each categorical column:

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import numpy as np

# Load the data into a Pandas DataFrame

df = pd.read_csv("data.csv")

# Check for missing values in each column

print("Missing values in each column:")

missing_values = df.isna().sum()

print(missing_values)

# Outlier detection using IQR in each numerical column

numerical_columns = df.select_dtypes(include=[np.number]).columns

for col in numerical_columns:

    # Quartiles and interquartile range for this column

    Q1 = df[col].quantile(0.25)

    Q3 = df[col].quantile(0.75)

    IQR = Q3 - Q1

    # Rows that fall outside the 1.5 * IQR fences

    outliers = df[(df[col] < (Q1 - 1.5 * IQR)) | (df[col] > (Q3 + 1.5 * IQR))]

    print("Outliers in column {} based on IQR:".format(col))

    print(outliers)

# Get data type of each column

print("Data type of each column:")

print(df.dtypes)

# Get class of each column (number of columns of each data type)

print("Class of each column:")

print(df.dtypes.value_counts())

# Get mean, median, and mode of each numerical column

print("Mean of each numerical column:")

print(df.mean(numeric_only=True))

print("Median of each numerical column:")

print(df.median(numeric_only=True))

print("Mode of each numerical column:")

print(df.mode(numeric_only=True))

# Get mode of each categorical column

print("Mode of each categorical column:")

categorical_columns = df.select_dtypes(exclude=[np.number]).columns

for col in categorical_columns:

    print("Mode of column {}:".format(col))

    # mode() returns a Series; take the first value if there are ties

    print(df[col].mode().values[0])


EDA in R

Exploratory Data Analysis (EDA) is a crucial step in the data science process that involves analyzing and summarizing data to gain insights and identify patterns. Here is how EDA can be performed in R:

Load the data

To start, you will need to load the data into R. For this example, we’ll use the famous iris dataset that is included in the datasets package:

data("iris")

Summary Statistics

To get summary statistics of the data, you can use the summary() function:

summary(iris)

Data Exploration

To explore the data, you can use various functions and methods provided by R. For example, to see the first few rows of the data, you can use the head() function:

head(iris)

Univariate Analysis

To perform univariate analysis, you can plot histograms and box plots using the ggplot2 library. For example, to plot the histogram of the Sepal.Length column, you can use the following code:

library(ggplot2)

ggplot(iris, aes(x=Sepal.Length)) +

geom_histogram(binwidth=0.5, fill="blue", color="black") +

xlab("Sepal Length") + ylab("Frequency") +

ggtitle("Histogram of Sepal Length")

Multivariate Analysis

To perform multivariate analysis, you can create scatter plots, pair plots, and more using the ggplot2 library. For example, to plot a scatter plot of Sepal.Length vs. Sepal.Width, you can use the following code:

ggplot(iris, aes(x=Sepal.Length, y=Sepal.Width)) +

geom_point(color="blue") +

xlab("Sepal Length") + ylab("Sepal Width") +

ggtitle("Scatter Plot of Sepal Length vs Sepal Width")

Here is a script in R that reports the missing values in each column, the outliers based on IQR in each numerical column, the data type (class) of each column, the mean, median, and mode of each numerical column, and the mode of each categorical column:

library(tidyverse)

# Load the data into a data frame

df <- read.csv("data.csv")

# Check for missing values in each column

print("Missing values in each column:")

missing_values <- sapply(df, function(x) sum(is.na(x)))

print(missing_values)

# Outlier detection using IQR in each numerical column

numerical_columns <- df %>% select_if(is.numeric)

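for (col in names(numerical_columns)) {

  # na.rm = TRUE so that missing values do not cause quantile() to fail

  Q1 <- quantile(df[, col], probs = 0.25, na.rm = TRUE)

  Q3 <- quantile(df[, col], probs = 0.75, na.rm = TRUE)

  iqr <- Q3 - Q1

  # which() drops rows where the comparison is NA

  outliers <- df[which(df[, col] < (Q1 - 1.5 * iqr) | df[, col] > (Q3 + 1.5 * iqr)), ]

  print(paste("Outliers in column", col, "based on IQR:"))

  print(outliers)

}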

# Get data type of each column

print("Data type of each column:")

print(sapply(df, class))

# Get mean, median, and mode of each numerical column

print("Mean of each numerical column:")

print(colMeans(numerical_columns, na.rm = TRUE))

print("Median of each numerical column:")

print(apply(numerical_columns, 2, median, na.rm = TRUE))

print("Mode of each numerical column:")

for (col in names(numerical_columns)) {

print(paste("Mode of column", col, ":"))

print(as.data.frame(table(numerical_columns[, col]))[which.max(table(numerical_columns[, col])), ])

}

# Get mode of each categorical column

print("Mode of each categorical column:")

categorical_columns <- df %>% select_if(~ !is.numeric(.))

for (col in names(categorical_columns)) {

print(paste("Mode of column", col, ":"))

print(as.data.frame(table(categorical_columns[, col]))[which.max(table(categorical_columns[, col])), ])

}

5-point summary in EDA

The 5-point summary (also called the five-number summary) in Exploratory Data Analysis (EDA) is a set of descriptive statistics that gives a compact overview of how a variable is distributed. It describes the center and spread of the data, hints at skewness, and supplies the quartiles used to flag outliers. This information can be used to make informed decisions about feature scaling, data pre-processing, and modeling.

It includes the following five values:

- Minimum: The minimum value in the dataset, which represents the smallest observation.

- First Quartile (Q1 or 25th percentile): The value that separates the lowest 25% of the observations from the rest of the dataset.

- Median (50th percentile): The value that separates the lowest 50% of the observations from the highest 50% of the observations.

- Third Quartile (Q3 or 75th percentile): The value that separates the lowest 75% of the observations from the highest 25% of the observations.

- Maximum: The maximum value in the dataset, which represents the largest observation.

Python function for calculating the 5-point summary

import numpy as np

import pandas as pd

def five_point_summary_with_std(data):

    summary = {}

    summary['Minimum'] = np.min(data)

    summary['First Quartile (Q1)'] = np.percentile(data, 25)

    summary['Median (Q2)'] = np.median(data)

    summary['Third Quartile (Q3)'] = np.percentile(data, 75)

    summary['Maximum'] = np.max(data)

    # np.std defaults to the population standard deviation (ddof=0)

    summary['Standard Deviation'] = np.std(data)

    return pd.Series(summary)

# Example usage

data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

five_point_summary_with_std(data)

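This will return a Series object with the 5-point summary and standard deviation values for the input data. Note that np.percentile interpolates linearly between observations by default, so the quartiles need not be values that appear in the data, and that np.std computes the population standard deviation:

Minimum 1.000000

First Quartile (Q1) 3.250000

Median (Q2) 5.500000

Third Quartile (Q3) 7.750000

Maximum 10.000000

Standard Deviation 2.872281

dtype: float64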
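As a cross-check, pandas' describe() method reports the same five values without a custom function. The sketch below also shows, as an aside, that describe() uses the sample standard deviation (ddof=1), which is why its `std` differs from the np.std result above.

```python
import pandas as pd

data = pd.Series([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

# describe() returns count, mean, std, min, 25%, 50%, 75%, and max.
description = data.describe()

# Keep only the five values that make up the 5-point summary.
five_number = description[["min", "25%", "50%", "75%", "max"]]

sample_std = description["std"]     # divides by n - 1
population_std = data.std(ddof=0)   # divides by n, like np.std's default

print(five_number)
print("Sample std:", round(sample_std, 4))
print("Population std:", round(population_std, 4))
```

Which standard deviation is appropriate depends on whether the data is treated as a sample or as the whole population.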

R function for calculating the 5-point summary

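five_point_summary_with_std <- function(data) {

  summary <- c(

    Minimum = min(data),

    # names = FALSE keeps quantile() from appending ".25%" to the element name

    First_Quartile = quantile(data, 0.25, names = FALSE),

    Median = median(data),

    Third_Quartile = quantile(data, 0.75, names = FALSE),

    Maximum = max(data),

    # sd() computes the sample standard deviation (divides by n - 1)

    Standard_Deviation = sd(data)

  )

  return(summary)

}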

# Example usage

data <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)

five_point_summary_with_std(data)

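This will return a named vector with the 5-point summary and standard deviation values for the input data. The standard deviation differs from the Python result because R's sd() computes the sample standard deviation (dividing by n - 1), whereas np.std divides by n by default:

Minimum 1.000000

First_Quartile 3.250000

Median 5.500000

Third_Quartile 7.750000

Maximum 10.000000

Standard_Deviation 3.027650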
