Introduction

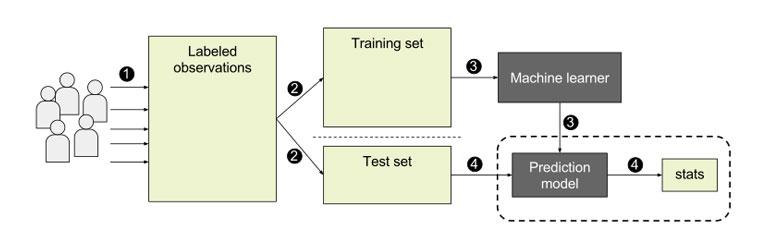

Boosting methods are algorithms that improve the accuracy of a machine learning model, primarily by reducing the bias of the learning process. Examples include XGBoost, gradient boosting, and BrownBoost; each weights the training examples in a different way. Boosting is most often applied to decision trees and can be used for both regression and classification.

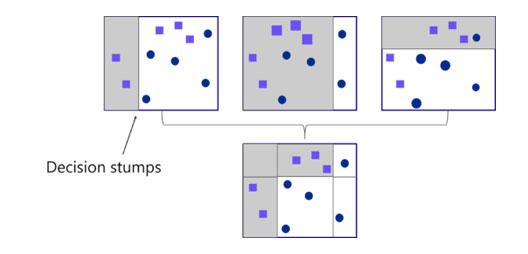

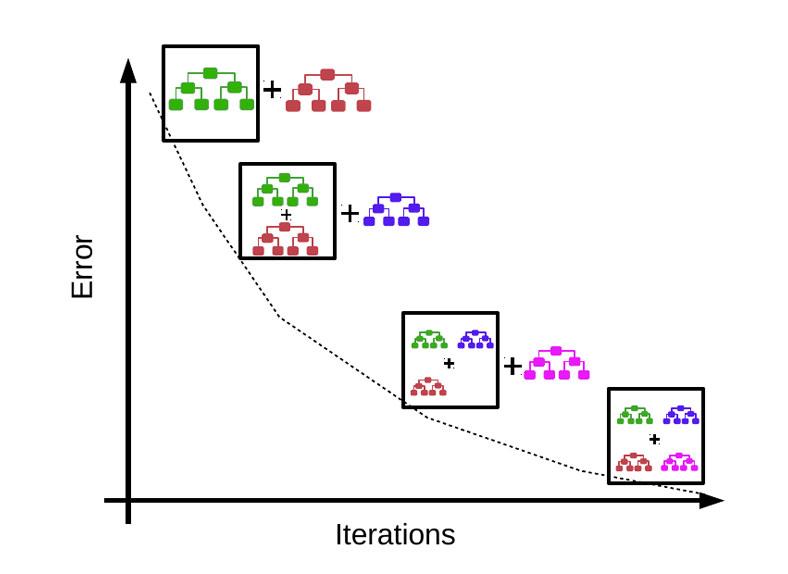

In general, boosting methods are useful for improving weak learners, that is, models that perform only slightly better than chance. They combine weak rules, each trained on a differently weighted version of the data, into a single stronger rule. The goal is for the combined prediction to be more accurate than that of any individual weak learner. The process is iterative: in each round the algorithm finds the misclassified data points and increases their weights. Iteration continues until the target accuracy or a maximum number of rounds is reached.

Boosting methods are often used for categorization tasks where classification speed is crucial. Standard kernel machines can be slow at prediction time, while boosted classifiers can perform nearly as well. These methods are considered standard and are widely used in many applications. In some settings they can also work with less training data and fewer features than other methods.

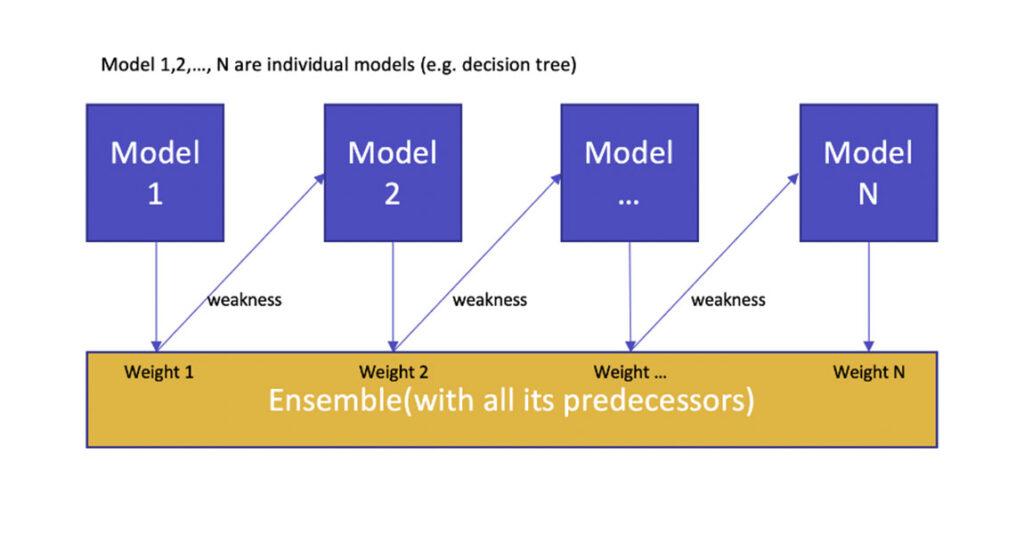

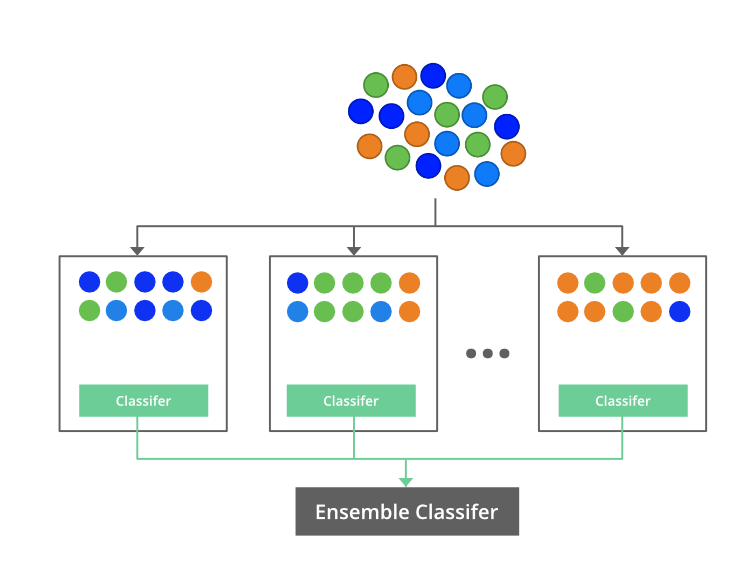

Boosting is a form of ensemble learning, in which several models are combined to make a prediction. It fits weak learners to a dataset sequentially and combines the predictions of all of them. Because each new learner concentrates on the examples the earlier ones got wrong, the ensemble can learn to predict classes that are difficult for any single model.

What is a Boosting Algorithm?

To boost something means to help or improve it. A boosting algorithm is one of a family of algorithms that convert weak learners into a strong learner. This is done to raise the model's accuracy and reduce its prediction errors.

Here are some of the features of boosting algorithms:

- It is an ensemble model that can be used for both description and prediction.

- Boosting is a general technique, not one specific model.

- Its predictive power is typically greater than that of a single decision tree or of bagging.

- Scaling up boosting is difficult because training is sequential: each new estimator depends on the predictions of the previous ones.

- It performs feature selection implicitly, which is an advantage of this approach.

How does a Boosting Algorithm work in Machine Learning?

The basic idea behind boosting algorithms in machine learning is that many weak learners are brought together to form one strong rule. The weak rules are produced by applying a base machine learning algorithm to differently weighted distributions of the data set. Each time the base algorithm is applied, it produces a new weak rule, and this is repeated as an iterative process. After many iterations there are many weak rules. The boosting algorithm then combines all the weak prediction rules into a single strong prediction rule.

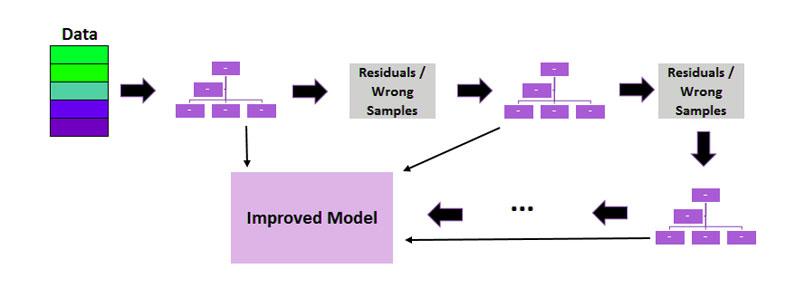

What is the process of choosing different distributions in each round?

Step 1 – The base learner takes the entire distribution and assigns equal weight to every observation.

Step 2 – If the first base learning algorithm makes prediction errors, greater weight is given to the observations that were predicted incorrectly. The next base learning algorithm is then applied.

Step 3 – Repeat step 2 until the base learning algorithm reaches its limit or the desired accuracy is achieved.

After step 3, all the weak learners are combined into a single strong learner, which gives an improved prediction model. Boosting concentrates on the examples on which the earlier weak rules made the most errors.
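The three steps above can be sketched with an AdaBoost-style weight update, which is one concrete choice among several boosting schemes. This is a minimal illustration assuming NumPy and scikit-learn are installed; the helper names `boost_stumps` and `predict_boosted`, the synthetic dataset, and the fixed number of rounds are assumptions made for the example, not part of any library.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def boost_stumps(X, y, n_rounds=20):
    """AdaBoost-style boosting of depth-1 trees; y must hold labels -1 or +1."""
    n = len(y)
    weights = np.full(n, 1.0 / n)  # Step 1: equal weight for every observation
    stumps, alphas = [], []
    for _ in range(n_rounds):  # Step 3: repeat for a fixed number of rounds
        stump = DecisionTreeClassifier(max_depth=1)
        stump.fit(X, y, sample_weight=weights)
        pred = stump.predict(X)
        # Weighted error of this weak learner, clipped to avoid log(0)
        err = np.clip(weights[pred != y].sum(), 1e-10, 1 - 1e-10)
        alpha = 0.5 * np.log((1 - err) / err)  # vote strength of this learner
        # Step 2: raise the weights of misclassified points, lower the rest
        weights = weights * np.exp(-alpha * y * pred)
        weights /= weights.sum()
        stumps.append(stump)
        alphas.append(alpha)
    return stumps, alphas


def predict_boosted(stumps, alphas, X):
    """Combine the weak learners by a weighted vote."""
    votes = sum(a * s.predict(X) for s, a in zip(stumps, alphas))
    return np.where(votes >= 0, 1, -1)


# Synthetic data (an assumption for illustration only)
X, y01 = make_classification(n_samples=300, n_features=10, random_state=0)
y = 2 * y01 - 1  # map labels {0, 1} to {-1, +1}

stumps, alphas = boost_stumps(X, y)
single_acc = (stumps[0].predict(X) == y).mean()
boosted_acc = (predict_boosted(stumps, alphas, X) == y).mean()
print(f"first stump: {single_acc:.3f}, boosted: {boosted_acc:.3f}")
```

The printed accuracies are measured on the training data, so they show how the combined vote fits the data rather than how well it generalizes.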

Types Of Boosting Methods in Machine Learning

Boosting is a type of ensemble learning that combines several models to produce a final prediction. This article covers three boosting methods:

- AdaBoost (Adaptive Boosting) algorithm

- Gradient Boosting algorithm

- XGBoost algorithm

AdaBoost (Adaptive Boosting) Algorithm

The AdaBoost algorithm merges weak learners into a strong learner by weighting them. In the first iteration every data point is given equal weight, and prediction begins. If a data point is predicted incorrectly, more weight is given to that data point in the next iteration. The procedure continues until the required accuracy has been achieved. The result is a strong learner.
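As a rough illustration, scikit-learn's `AdaBoostClassifier` can be compared with a single decision stump, which is the kind of weak learner it boosts by default. The synthetic dataset, the train/test split, and the choice of 50 estimators are assumptions made for this sketch.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data (an assumption for illustration only)
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# A single depth-1 tree (decision stump) is the weak learner on its own
stump = DecisionTreeClassifier(max_depth=1, random_state=0).fit(X_train, y_train)

# AdaBoost trains a sequence of reweighted stumps and combines their votes
ada = AdaBoostClassifier(n_estimators=50, random_state=0).fit(X_train, y_train)

stump_acc = stump.score(X_test, y_test)
ada_acc = ada.score(X_test, y_test)
print(f"stump accuracy:    {stump_acc:.3f}")
print(f"AdaBoost accuracy: {ada_acc:.3f}")
```

How much the ensemble gains over the single stump depends on the data, so the comparison is best repeated on your own dataset.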

XGBoost Algorithm

XGBoost stands for Extreme Gradient Boosting. It is an advanced implementation of gradient boosting, designed for computational efficiency and better performance. Here are some of its features:

- Cross-validation – XGBoost has built-in cross-validation that can be run at every boosting iteration, which makes it easier to find the best number of boosting iterations in a single run.

- Distributed computing – very large models can be trained across several machines.

- Parallel processing – tree construction uses all of the CPU's cores during training.

- Cache optimization – data structures and algorithms are designed to make good use of the hardware cache, which increases execution speed.

Gradient Boosting Algorithm

The goal of the gradient boosting algorithm is to define a loss function and then minimize it. The mean squared error (MSE) is one loss function that can be used; the sum of squared errors below differs from it only by the constant factor 1/n.

$$\text{LOSS} = \sum_{i} (y_i - \hat{y}_i)^2$$

where

- $y_i$ = the target value for observation $i$

- $\hat{y}_i$ = the predicted value for observation $i$

The loss function is the sum of the squared deviations between the target and predicted values. The goal is to reduce the loss to as near zero as possible. To minimize it, use gradient descent and repeatedly update the predicted values, so that each update moves toward the point where the MSE is at its minimum.

Hence, the real purpose is to reduce the sum of squared residuals as much as possible.
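To make the link between residuals and gradients explicit, write $y_i$ for the target and $\hat{y}_i$ for the prediction of observation $i$, and let $\eta$ be a step size (learning rate). The index $i$ and the symbol $\eta$ are notation assumed here for the derivation. Differentiating the loss with respect to a single prediction and taking one gradient descent step gives:

$$
\begin{aligned}
L &= \sum_{i} (y_i - \hat{y}_i)^2 \\
\frac{\partial L}{\partial \hat{y}_i} &= -2\,(y_i - \hat{y}_i) \\
\hat{y}_i &\leftarrow \hat{y}_i - \eta\,\frac{\partial L}{\partial \hat{y}_i}
  = \hat{y}_i + 2\eta\,(y_i - \hat{y}_i)
\end{aligned}
$$

The negative gradient is proportional to the residual $y_i - \hat{y}_i$, which is why fitting each new learner to the current residuals amounts to a gradient descent step on the squared-error loss.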

Gradient Boosting Model Explained

Gradient boosting is a boosting algorithm in machine learning and a powerful technique for building predictive models. Each new model is fitted to the error left by the previous models. It copes well with difficult prediction problems and is used for both regression and classification.

Gradient descent is a first-order optimization algorithm that finds a local minimum of a function. Gradient boosting runs several models in sequence, fitting each new model so that the combined estimate improves.
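Gradient descent itself fits in a few lines. The sketch below minimizes a simple one-dimensional function; the function name `gradient_descent`, the example function, the learning rate, and the number of steps are all assumptions chosen for illustration.

```python
def gradient_descent(grad, x0, learning_rate=0.1, n_steps=100):
    """Repeatedly step against the gradient, starting from x0."""
    x = x0
    for _ in range(n_steps):
        x = x - learning_rate * grad(x)
    return x


# f(x) = (x - 3)^2 has derivative 2 * (x - 3) and its minimum at x = 3
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_min, 4))  # prints 3.0
```

Only the first derivative is used, which is what "first-order" refers to.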

How does the gradient boosting model work?

Gradient boosting has three main components:

- A loss function – the loss function is the quantity to be optimized. The appropriate loss function depends on the type of problem. You can define your own loss function, but it must be differentiable. An important property of gradient boosting is that it does not need a new boosting algorithm for every loss function.

- A weak learner – weak learners make the predictions. Decision trees are used as the weak learner; specifically, regression trees, which output real values so that the outputs of successive trees can be added together.

- An additive model – trees are added one at a time, and the existing trees in the model are not modified. Gradient descent is used to minimize the loss as trees are added: instead of updating a set of parameters such as weights after calculating the error, a new tree is added that reduces the loss.
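The three components can be seen together in a small from-scratch sketch for the squared-error loss. It assumes NumPy and scikit-learn; the helper names `fit_gradient_boosting` and `predict_gradient_boosting`, the synthetic sine data, and the hyperparameter values are hypothetical choices for illustration, not how any particular library implements the method.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_gradient_boosting(X, y, n_trees=50, learning_rate=0.1, max_depth=2):
    """Additive model for squared error: each tree fits the current residuals."""
    base = float(np.mean(y))  # initial constant prediction
    pred = np.full(len(y), base)
    trees = []
    for _ in range(n_trees):
        # Residuals equal the negative gradient of squared error, up to a factor of 2
        residuals = y - pred
        tree = DecisionTreeRegressor(max_depth=max_depth)  # the weak learner
        tree.fit(X, residuals)
        # Add the new tree's output; earlier trees are left unchanged
        pred = pred + learning_rate * tree.predict(X)
        trees.append(tree)
    return base, trees


def predict_gradient_boosting(base, trees, X, learning_rate=0.1):
    """Sum the scaled tree outputs; learning_rate must match the one used in fitting."""
    return base + learning_rate * sum(tree.predict(X) for tree in trees)


# Synthetic data (an assumption for illustration only)
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)

base, trees = fit_gradient_boosting(X, y)
mse_start = np.mean((y - base) ** 2)
mse_end = np.mean((y - predict_gradient_boosting(base, trees, X)) ** 2)
print(f"MSE with constant model: {mse_start:.4f}")
print(f"MSE after boosting:      {mse_end:.4f}")
```

A small learning rate means each tree corrects only part of the remaining error, which is a common way to trade more trees for a smoother fit.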

Gradient Boosting Machine in Python

A gradient boosting machine builds each new base learner to be as strongly correlated as possible with the negative gradient of the loss function of the whole ensemble. In Python, scikit-learn provides Gradient Tree Boosting, also known as Gradient Boosted Regression Trees (GBRT). It can be used for classification as well as regression problems.

Here is an example of Gradient Tree Boosting in Python:

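    from sklearn.datasets import make_classification, make_regression
    from sklearn.ensemble import GradientBoostingClassifier, GradientBoostingRegressor

    # Synthetic data stands in for your own feature matrix X and target Y
    X, Y = make_regression(n_samples=200, n_features=5, random_state=0)

    # For regression: 3 trees with learning_rate=1 keeps the example tiny
    model = GradientBoostingRegressor(n_estimators=3, learning_rate=1)
    model.fit(X, Y)

    # For classification (default hyperparameters)
    X, Y = make_classification(n_samples=200, n_features=5, random_state=0)
    model = GradientBoostingClassifier()
    model.fit(X, Y)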
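To watch the additive model improve as trees are added, scikit-learn's `staged_predict` method yields the ensemble's prediction after each boosting stage. The sketch below is one possible check on held-out data; the synthetic dataset, the split, and the hyperparameter values are assumptions for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Synthetic data (an assumption for illustration only)
X, y = make_regression(n_samples=400, n_features=5, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

model = GradientBoostingRegressor(n_estimators=100, learning_rate=0.1, random_state=0)
model.fit(X_train, y_train)

# One test-set MSE value per boosting stage (1 tree, 2 trees, ..., 100 trees)
test_mse = [mean_squared_error(y_test, pred) for pred in model.staged_predict(X_test)]
print(f"test MSE after 1 tree:    {test_mse[0]:.1f}")
print(f"test MSE after 100 trees: {test_mse[-1]:.1f}")
```

Plotting `test_mse` against the stage number is a simple way to judge whether adding more trees still helps or the model has started to overfit.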

Gradient Boosting vs. Deep Learning

Both techniques are part of artificial intelligence and machine learning, and both are used in areas such as credit risk management.

- Gradient boosting often outperforms deep learning on structured, tabular data.

- Gradient boosting is also typically faster to train, because it requires less computation.

Conclusion

Boosting algorithms in machine learning are easy to understand, and their predictions are easy to work with. Boosting is efficient and has strong predictive power. It is, however, sensitive to outliers, because each new learner concentrates on the examples that earlier learners got wrong. On many structured-data problems gradient boosting compares favorably with deep learning, which is part of what makes boosting algorithms powerful. Many machine learning researchers use boosting algorithms to improve the accuracy of their models.